ChrisR wrote: Would that be "it"? Possibly not. That Oly 135 is an old lens design - I didn't think mine was particularly sharp.

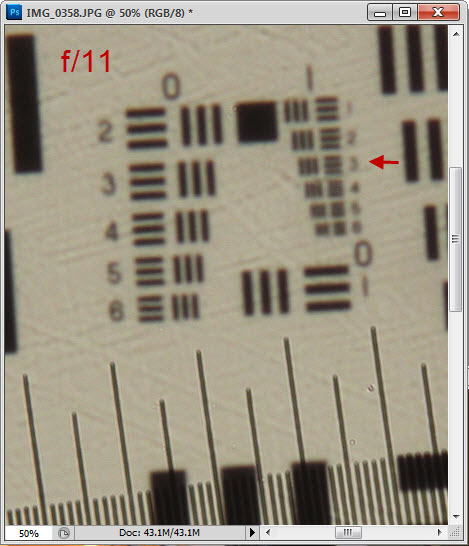

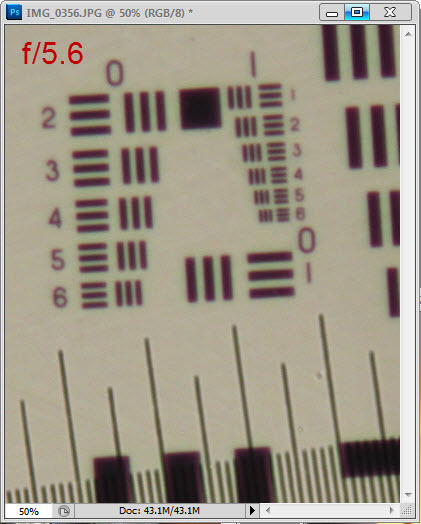

Oh, I didn't mean to imply that f/11 was as sharp as it gets. Heavens no! Here's the f/11 image again, and then the matching f/5.6 that I shot at the same time.

So, um, let's see, that's another 3 elements at least, sqrt(2) on each axis, double the pixel count... I think we're up to 96 megapixels now, with lots more room to go still higher from there!

----------------

I've spent quite some time this evening looking at images and reading the cambridgeincolour page. I think I now understand what's going on.

Cambridgeincolour is concerned with a question commonly posed by users:

"What apertures can I use, given the sensor I have?" For example, their number labeled "Diffraction Limits Standard Grayscale Resolution" reflects the aperture at which artifact-free resolution begins to be impacted.

In contrast, I'm answering a different question:

"What sensor would I need, to capture all the detail in the image formed by my optics?" The answer to that question is 3 pixels per line pair for the finest lines resolved in the optical image.

When you work the numbers, it turns out that the answer to my question is a much higher pixel count than you'd get by working backward from cambridgeincolour's answer to their question.
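To make "working the numbers" concrete, here is a rough sketch of one way to do it. This is not necessarily the arithmetic behind the figures quoted above. It assumes the finest detail in the optical image sits at the diffraction cutoff frequency, 1/(wavelength x f-number), which is an upper bound on what the optics can deliver. It also assumes green light at 0.55 micron, a 22.3 x 14.9 mm APS-C sensor, and that the f-number passed in is the effective one. The function name and defaults are mine, for illustration only.

```python
def required_megapixels(f_number,
                        sensor_w_mm=22.3,       # assumed APS-C width
                        sensor_h_mm=14.9,       # assumed APS-C height
                        wavelength_um=0.55,     # assumed green light
                        pixels_per_line_pair=3.0):
    """Pixel count needed to sample the diffraction cutoff at the given rate.

    The cutoff frequency 1 / (wavelength * f_number) is where the optical
    MTF reaches zero, so this is an upper bound, not a measured value.
    """
    # Cutoff in line pairs (cycles) per mm; wavelength converted from um to mm.
    cutoff_lp_per_mm = 1000.0 / (wavelength_um * f_number)
    pixels_per_mm = pixels_per_line_pair * cutoff_lp_per_mm
    total_pixels = (sensor_w_mm * pixels_per_mm) * (sensor_h_mm * pixels_per_mm)
    return total_pixels / 1e6


if __name__ == "__main__":
    for n in (5.6, 8, 11, 16):
        print(f"f/{n}: about {required_megapixels(n):.0f} MP")
```

Under these assumptions the f/11 figure comes out far above 15 megapixels, and each full stop wider doubles the required pixel count.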

The issue of sensor quality is interesting. Some analyses are based on the minimum sampling rate of 2 pixels per line pair that's implied by Shannon's sampling theorem. But as I've illustrated HERE, it turns out that you really need about 3 pixels per line pair to avoid degrading detail that happens to be positioned badly with respect to pixel boundaries, even with a perfect sensor. So personally, I'm inclined to think that sensors should be evaluated on their performance at 3 pixels per line pair, not 2.
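For anyone who wants to play with the 2-versus-3 question numerically, here is a small sketch. It is not the linked illustration, just a simplified model: a sinusoidal line pattern, ideal monochrome pixels that average the light falling across their full width (no Bayer filter, no noise), and contrast measured as half the spread between the brightest and darkest pixel. All function names are hypothetical.

```python
import math


def pixel_values(pixels_per_cycle, phase, n_pixels=12):
    """Average of cos(theta + phase) over each pixel, for ideal box pixels."""
    n = pixels_per_cycle
    values = []
    for k in range(n_pixels):
        a = 2 * math.pi * k / n + phase          # left edge of pixel k
        b = 2 * math.pi * (k + 1) / n + phase    # right edge of pixel k
        # Mean of cosine over [a, b] is (sin b - sin a) / (b - a).
        values.append((math.sin(b) - math.sin(a)) * n / (2 * math.pi))
    return values


def contrast(pixels_per_cycle, phase):
    """Half the range of pixel values; the input pattern has contrast 1."""
    v = pixel_values(pixels_per_cycle, phase)
    return (max(v) - min(v)) / 2


def worst_case_contrast(pixels_per_cycle, steps=360):
    """Lowest contrast found over all pattern-to-pixel alignments tried."""
    return min(contrast(pixels_per_cycle, 2 * math.pi * i / steps)
               for i in range(steps))


if __name__ == "__main__":
    for n in (2, 3, 4):
        print(n, "pixels per line pair: worst-case contrast",
              round(worst_case_contrast(n), 3))
```

In this model, the worst alignment at 2 pixels per line pair wipes the pattern out completely, while at 3 pixels per line pair a good share of the contrast survives no matter how the lines fall on the pixels.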

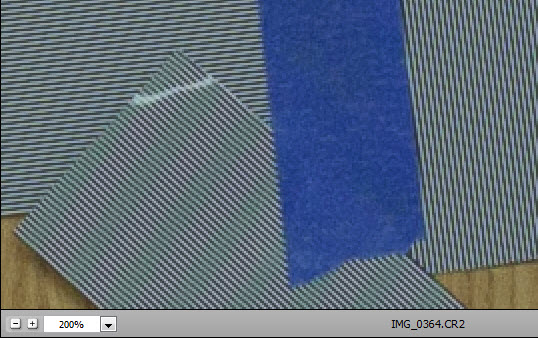

It turns out that the physical sensor in my Canon T1i actually does a pretty good job at 3 pixels per line pair. Here's a sample, processed with Adobe Camera Raw 6.3 and shown here at 200%. In the slanting lines, there's some periodic loss of contrast accompanied by color shift, but still there's no question that the lines are resolved.

So, while I would certainly applaud further improvement in sensor quality, the current one is already pretty good under the conditions where I would want to use it anyway.

OK, let's recap. We got to this discussion because I claimed there was a lot more information in an f/10 image than my 15 mp APS sensor could capture. That prompted a dissent from seta666, who wrote that f/10 "is around the limit to get the most of a 10mpx APS-C sensor". Subsequent discussion referenced the cambridgeincolour diffraction calculator, which provides numbers like these:

For a 15mpx x1.6 APS-C sensor (Canon) values are:

Diffraction May Become Visible f/7.1

Diffraction Limits Extinction Resolution f/8.9

Diffraction Limits Standard Grayscale Resolution f/10.7

OVERALL RANGE OF ONSET f/7.1 - f/10.7

A quick reading of these numbers may suggest that the sensor can capture everything there is to see in an image at f/11. However, the experimental images tell a different story. It may be true that f/11 noticeably adds degradation to an image that has already been degraded by the 15 mp sensor. However, there remains much finer detail in an f/11 image than a 15 mp sensor can capture. Cambridgeincolour is simply answering a different question than I am.

I would again like to call everyone's attention to a basic fact of digital sampling. This is discussed in detail HERE, but to quickly summarize:

In order for our digital image to "look sharp", we have to shoot it or render it at a resolution that virtually guarantees some of the detail in the optical image will be lost. If you see some tiny hairs just barely separated at one place in the digital image, it's a safe bet that there are quite similar tiny hairs at other places that did not get separated, just because they happened to line up differently with the pixels.

Conversely, in order to guarantee that all the detail in the optical image gets captured in the digital image, we have to shoot and render at a resolution that completely guarantees the digital image won't look sharp.

So, there's "sharp" and there's "detailed" -- pick one or the other 'cuz you can't have both. What a bummer!

I happen to be a "detailed" guy, so I like lots of pixels. If you're a "sharp" guy, you'll prefer fewer. They both work.

Thanks for the stimulating discussion.

BTW, I think I will split off this discussion of resolution to its own thread. That will be a bit disruptive now, but it will make a lot more sense later.

--Rik