Several weeks ago I was looking at deconvolution for heavily diffraction-limited images, so I have some test samples sitting around here.

Deconvolution is very effective for astrophotography and microscopy. In astrophotography there is an array of point sources (stars) from which the point spread function (PSF) can be inferred, which makes life easy. In addition, the subjects have essentially no depth.

For microscopy we can divide the options into 2d and 3d deconvolution. 3d deconvolution generally uses a pre-measured 3d PSF and applies it to a stack of images taken at different depths to try to create a more detailed, higher-contrast image. 2d deconvolution works on a single image, using a pre-measured PSF, a synthetic PSF, blind deconvolution, or some combination of these.
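For anyone curious what 2d deconvolution with a synthetic PSF actually does, here is a minimal sketch. It uses Richardson-Lucy, a widely used iterative deconvolution algorithm; I don't know that Piccure+ or Focus Magic work this way, so treat it as an illustration only. To stay short it works on a 1d signal in plain Python, assumes a Gaussian PSF (real diffraction blur is closer to an Airy pattern), and wraps around at the edges. The names `gaussian_psf`, `convolve` and `richardson_lucy` are made up for this example.

```python
import math

def gaussian_psf(sigma, radius):
    """Synthetic 1d PSF: a normalized Gaussian (an assumption, not a measured PSF)."""
    taps = [math.exp(-0.5 * (i / sigma) ** 2) for i in range(-radius, radius + 1)]
    total = sum(taps)
    return [t / total for t in taps]

def convolve(signal, kernel):
    """Same-size convolution with wrap-around edges (kernel assumed symmetric)."""
    radius = len(kernel) // 2
    n = len(signal)
    return [
        sum(w * signal[(i + k - radius) % n] for k, w in enumerate(kernel))
        for i in range(n)
    ]

def richardson_lucy(blurred, psf, iterations=30):
    """Iteratively refine an estimate so that, once re-blurred, it matches the input."""
    estimate = list(blurred)
    for _ in range(iterations):
        reblurred = convolve(estimate, psf)
        # Ratio of observed to predicted values; the floor avoids division by zero.
        ratio = [b / max(r, 1e-12) for b, r in zip(blurred, reblurred)]
        # The PSF is symmetric, so the flipped PSF equals the PSF itself.
        correction = convolve(ratio, psf)
        estimate = [e * c for e, c in zip(estimate, correction)]
    return estimate

# Two point-like details on a dim background, blurred and then restored.
truth = [0.1] * 64
truth[28] = 1.0
truth[36] = 1.0
psf = gaussian_psf(sigma=2.0, radius=6)
blurred = convolve(truth, psf)
restored = richardson_lucy(blurred, psf, iterations=50)
print("peak before:", max(blurred), "peak after:", max(restored))
```

This is the easy case: the PSF used to restore is exactly the one that did the blurring, and there is no noise. With a guessed PSF and real sensor noise, more iterations tend to bring artifacts along with the detail.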

It would be interesting if in the future we could apply aspects of 3d deconvolution to image stacking, since they share a little in common. I suspect there would be quite a few challenges though: processing speed, vastly different subjects (especially in transparency and depth), not having a pre-measured PSF for the optics, etc.

But for right now, we are interested in 2d deconvolution, where, unlike in astrophotography, we have few to no good areas in the image from which to measure the PSF.

There is also the question of when to sharpen: before you stack, or after? I suspect that sharpening before stacking will have an impact on how some details are stacked using DMap. Sharpening before stacking with PMax should obviously affect the amount of image noise as well. While I haven't gotten to testing this directly, we will get to see a bonus effect of sharpening before stacking in just a second!

All images here were stacked in Zerene using PMax. The sensor was 22.5 mm wide (and quite dusty at the time).

The first set tests sharpening and deconvolution before stacking. Deconvolution was done using a program called Piccure+, since it can batch process images.

No Sharpening:

Sharpening before stacking with Lightroom:

Sharpening before stacking with Piccure+:

Fullsized images of this set are here: http://www.thelostvertex.com/uploads/pm ... ngFull.zip

If you took the time to flash between these images in new tabs or by downloading them, you will have noticed something interesting: they don't line up! I think the no-sharpening image looks worst and the Piccure+ image looks best. If you download the full-size images and flash between them, you will notice that the alignment seems to change at the same rate as image sharpness/contrast, in the order NoSharpening>Lightroom>Piccure. Hopefully Rik weighs in with his thoughts on this, since I didn't expect to see that.

The next series of images looks at various deconvolution software applied after stacking, starting from the previous "no sharpening" image. I also include a couple of images sharpened with an unsharp mask (USM) matched to a deconvolution result.*

No sharpening:

Deconvolution with Focus Magic:

Unsharp mask matched to Focus Magic:

Deconvolution with Piccure+:

Unsharp mask matched to Piccure+:

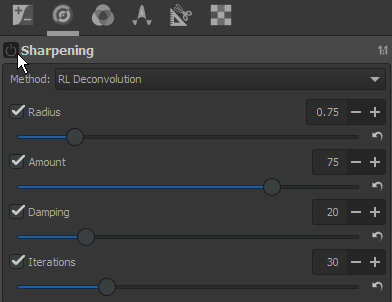

Deconvolution with RawTherapee (from the no-sharpening JPEG to JPEG):

Fullsized images of this set are here: http://www.thelostvertex.com/uploads/pm ... ngFull.zip

I've done other tests, and this seems pretty representative of what I have seen. RawTherapee does not do very well and offers limited control (the max radius is 2.5 px, and this is the best I could do to show some change in detail while limiting artifacts on such a heavily diffraction-limited image). Focus Magic does OK here. On some images I have tested it has done a little better than this, and on some a bit worse. The unsharp mask matched to Focus Magic is certainly a lot noisier. Piccure+ seemed the best of this set to me. In every test I have done so far between Piccure+ and Focus Magic, Piccure+ has done as well or slightly better. Again, the unsharp mask matched to it is a fair bit noisier and doesn't look quite as good.

I have processed several other images with the previously mentioned software (and a couple of others), and in blind testing with my brother, he always chose the deconvolved image as looking better to him. So at least for very heavy diffraction, I see some potential.

I'll stop here and not rant about how much I dislike all of the deconvolution software that is out there, and how poorly designed it all... I'll stop.

*Comparisons were made by opening the deconvolved image as a layer, with the unsharpened image on top. The unsharpened image's blend mode was set to Difference, and an unsharp mask live filter was applied to it, then adjusted until it matched the deconvolved image as closely as possible. The hope here is to get as close to an apples-to-apples comparison as I can.
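The matching above was done by eye, but the same idea can be sketched as a small search: try a grid of unsharp mask settings and keep the one with the smallest total difference from the target. This is only an illustration under assumptions, not what I actually ran. It works on a 1d signal in plain Python, models the unsharp mask as `signal + amount * (signal - blurred)` with a Gaussian blur and no threshold, and the names `gaussian_blur`, `unsharp_mask` and `match_usm` are made up for the example.

```python
import math

def gaussian_blur(signal, sigma):
    """Blur a 1d signal with a normalized Gaussian, clamping at the edges."""
    radius = max(1, int(3 * sigma))
    taps = [math.exp(-0.5 * (i / sigma) ** 2) for i in range(-radius, radius + 1)]
    total = sum(taps)
    n = len(signal)
    return [
        sum(w * signal[min(max(i + k - radius, 0), n - 1)]
            for k, w in enumerate(taps)) / total
        for i in range(n)
    ]

def unsharp_mask(signal, sigma, amount):
    """Classic USM: add back a scaled copy of (original minus blurred)."""
    blurred = gaussian_blur(signal, sigma)
    return [s + amount * (s - b) for s, b in zip(signal, blurred)]

def match_usm(unsharpened, target, sigmas, amounts):
    """Grid search for the (sigma, amount) whose USM result is closest to target."""
    def difference(params):
        sigma, amount = params
        candidate = unsharp_mask(unsharpened, sigma, amount)
        # Sum of absolute differences, like eyeballing a Difference blend layer.
        return sum(abs(c - t) for c, t in zip(candidate, target))
    return min(((s, a) for s in sigmas for a in amounts), key=difference)

# Soft-edged test signal; the "target" here is a stand-in for a deconvolved image.
unsharpened = gaussian_blur([0.2] * 20 + [0.8] * 20 + [0.4] * 20, 2.0)
target = unsharp_mask(unsharpened, 1.5, 1.0)
best = match_usm(unsharpened, target,
                 sigmas=[1.0, 1.5, 2.0, 2.5],
                 amounts=[0.5, 1.0, 1.5, 2.0])
print("best (sigma, amount):", best)
```

With a real deconvolved image the difference never reaches zero, so the search only finds the closest unsharp mask, which is the point of the comparison.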